For this article, I am using DBCC SQLPERF(logspace) to get size information about the transaction logs. I hope you will find this post useful in helping to monitor the growth of your transaction logs.

For this article, I am using DBCC SQLPERF(logspace) to get size information about the transaction logs. I hope you will find this post useful in helping to monitor the growth of your transaction logs.

The idea here is to capture and store the growth information at set intervals. This can provide a way to report on how much the logs are growing by and when. Reports are then delivered by an automated process.

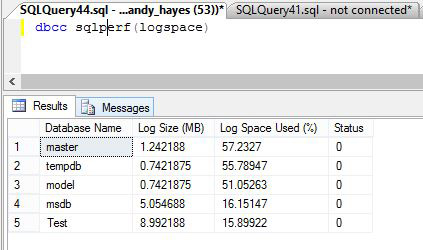

If you issue DBCC SQLPERF(logspace), you will see output similar to the following:

The output is self explanatory where for each database you can see the size of the transaction log and how much of it is in use currently.

So this is useful information on its own and even more useful if it can be captured on a regular basis and reported on.

So I wrote some T-SQL to do this and wrapped it up inside a stored procedure.

Firstly I will create a table to store the output of DBCC SQLPERF(logspace).

[sourcecode language=’sql’]

CREATE TABLE dbo.TransLogMonitor

(

LogID INT PRIMARY KEY IDENTITY(1,1),

LogDate DATETIME NOT NULL DEFAULT(GETDATE()),

DatabaseName VARCHAR(100) NOT NULL,

LogSizeMB DECIMAL(18, 2) NOT NULL,

LogSpaceUsed DECIMAL(18, 2) NOT NULL,

[Status] INT NOT NULL

)

CREATE INDEX IX_LogDate ON TransLogMonitor(LogDate);

[/sourcecode]

Now this is the bit where we capture the output.

[sourcecode language=’sql’]

INSERT INTO TransLogMonitor(DatabaseName, LogSizeMB, LogSpaceUsed, [Status])

EXEC (‘DBCC SQLPERF(logspace)’)

[/sourcecode]

DBCC execution completed. If DBCC printed error messages, contact your system administrator.

(5 row(s) affected)

Now run the code and then have a look at the data in your new table and it will look very similar to the output from running DBCC SQLPERF(logspace) to screen but with a couple of extra columns for the primary key values and the date that the data was inserted. The LogDate values are obviously very valuable enabling you to report on the data within any date range.

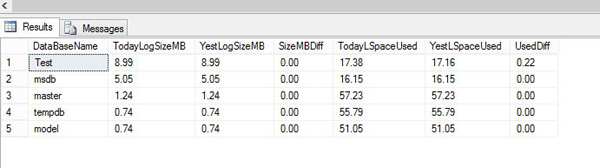

So lets say I wanted a report made available each day which gave me a comparison between yesterday’s and today’s growth, you could write something like this having the data collector running once a day.

[sourcecode language=’sql’]

DECLARE @Yesterday DATE = CONVERT(DATE, GETDATE()-1);

DECLARE @Today DATE = CONVERT(DATE, GETDATE());

SELECT COALESCE(Y.DatabaseName,T.DatabaseName) AS DatabaseName

, T.LogSizeMB AS TodayLogSizeMB

, Y.LogSizeMB AS YestLogSizeMB

, T.LogSizeMB – Y.LogSizeMB AS SizeMBDiff

, T.LogSpaceUsed AS TodayLSpaceUsed

, Y.LogSpaceUsed AS YestLSpaceUsed

, T.LogSpaceUsed – Y.LogSpaceUsed AS UsedDiff

FROM dbo.TransLogMonitor Y

FULL OUTER JOIN dbo.TransLogMonitor T ON T.DatabaseName = Y.DatabaseName

WHERE CONVERT(DATE, Y.LogDate) = @Yesterday AND CONVERT(DATE, T.LogDate) = @Today

ORDER BY TodayLogSizeMB DESC

[/sourcecode]

Returning this output:

Delivering the DBCC SQLPERF(logspace) report

Providing that you are running a version of SQL Server that is able to send email then you could deliver the report via this method. This example has attached the output as a text file but you can also format it up nicely as HTML if you want to.

[sourcecode language=’sql’]

EXEC msdb.dbo.sp_send_dbmail

@profile_name = ‘DB1’

, @recipients = ‘your smtp email address goes in here’

, @subject=’Transaction Log Space Consumption Report’

, @query = ‘EXEC DBName.dbo.usp_stored_procedure_name’

, @attach_query_result_as_file = 1

[/sourcecode]

To wrap this up….

Once you have the code written, it could be scheduled to your preference. As I mentioned, this example is to run daily and compares yesterday and today but you could run it hourly if you wanted to and then write some T-SQL to analyze the hours of the day when you get the most growth. You could write daily reports for the whole week, something to report on the month etc. It could be a great report to help with capacity planning.

In order to deliver this, you could send the report by scheduling with SQL Server Agent, an SSIS package, Reporting Services or SQLCMD called from a batch file using windows task scheduler.

It’s really up to you 🙂

References

DBCC SQLPERF – link to documentation is here

sp_send_dbmail – link to documentation is here

Hi, isn´t this necessary to run exactly before the translog backup to get accurate data?

Hi Magnus

Of course, instead of a daily summary, you can also run this more frequently and around your backup schedule to obtain statistics more aligned with changes to the size of and amount of free space in the log file. The numbers collected could be used to aggregate the growth over the course of the day for example.

Thanks for your comment, all the best.

Andy

Hi Andy,

Great article on DBCC SQLPERF(logspace). Just want to ask something as I’m new to SQL server so I don’t have much idea about same. When we execute DBCC SQLPERF(logspace) the last column in result is “Status”. What is this “status” all about? Thanks in advance!!!

Great Post DBCC SQLPERF seems very useful after reading this article, i have found another helpful post. see here: atdhebuja.wordpress.com/2015/12/01/how-to-view-sql-server-transaction-log-file/

Usefull thanks