Duplicate data is a common problem and in my experience comes in two main flavours; exact duplicate data and similar data. Exact duplicate data is when a data source contains the same values across all fields in the record. Similar data is when some of the fields match and to the naked eye can be classed as duplicate data but in fact it isn’t – it’s similar data and therefore it is unique.

Duplicate data is a common problem and in my experience comes in two main flavours; exact duplicate data and similar data. Exact duplicate data is when a data source contains the same values across all fields in the record. Similar data is when some of the fields match and to the naked eye can be classed as duplicate data but in fact it isn’t – it’s similar data and therefore it is unique.

In the case of similar data, an example might be where a file has been supplied which contains names and addresses. The file has been maintained in a spreadsheet and by more than one person. For whatever reason, the same name and address has been entered twice on two rows, except one editor did not include the postal code for the address. As a result, the rows are similar but not exact duplicates of each other. Apart from this, they are duplicate data.

Typically when loading data into a database table with unique constraints, de-duping the incoming data is a necessary step. For the purposes of this article, we will cover identifying and addressing exact duplicate data.

Lets imagine a scenario where you have been asked to de-duplicate a list of item codes and the data looks like this:

ItemCode

123456789

123456789

223344789

223344789

987554321

987554321

CREATE TABLE #ItemCodes

(

ItemCode VARCHAR(50)

);

INSERT INTO #ItemCodes

VALUES

('123456789'),

('123456789'),

('223344789'),

('223344789'),

('987554321'),

('987554321');

Identifying Duplicate Rows Using SQL

The first step is to find the duplicate data. This can be easily achieved using a simple select query in combination GROUP BY and HAVING. If we loaded this data into a staging table with no constraints, we could proceed to query the data like this:

SELECT ItemCode , COUNT(ItemCode) AS Duplicates FROM #ItemCodes GROUP BY ItemCode HAVING COUNT(ItemCode) > 1

The resulting output reduces our 6 records to 3 because the the HAVING clause only returns the records that have more than one instance of ItemCode occurring.

How to De-duplicate Duplicate Data

Now that we have identified the duplicate data, we can do something about it. We also know how many duplicates we are dealing with. In the instance of a 6 row table, it could be easy just to manually remove the rows 🙂 However these tasks are typically more complicated involving many more rows than this.

The first step is to make the data unique and the easiest way to do this is add some unique identity column to the data. If the duplicate data is already in a table in production, adding a unique identifier is still the way to go but this might mean exporting the data first to a new table, deleting it from the source table and then re-importing after de-duplication has happened.

If the data is being loaded into a staging table then it is easy enough to add a column for the unique identity.

ALTER TABLE #ItemCodes ADD ID INT IDENTITY(1,1) NOT NULL;

Querying the data now, it is unique:

SELECT * FROM #ItemCodes; ItemCode ID -------------------------------------------------- ----------- 123456789 1 123456789 2 223344789 3 223344789 4 987554321 5 987554321 6 (6 row(s) affected)

Lets now delete that duplicate data!

We’re ready to remove the duplicate data from our table. This is done using a DELETE statement and a self join, like this:

DELETE t1 FROM #ItemCodes t1 JOIN #ItemCodes t2 ON t1.ItemCode = t2.ItemCode AND t1.ID > t2.ID; (3 row(s) affected) SELECT * FROM #ItemCodes; ItemCode ID -------------------------------------------------- ----------- 123456789 1 223344789 3 987554321 5 (3 row(s) affected)

We’re now left with 3 rows, instead of 6. So our ItemCodes are now unique and can be loaded into our main database table. As you can see, ID’s 2, 4 and 6 were removed within the JOIN where t1.ID > t2.ID

Beware of lots of data and performance degeneration!

In an older post, I wrote about deleting rows from a table in a way which did not negatively effect performance too much. It’s worth a read as it illustrates what can happen if large transactions are issued in order to remove data. If you are dealing with large volumes of data, consider how to break the delete operation down into smaller parts to reduce the impact on your system if other users are querying the server.

Prevention is better than the cure!

Duplicate data can cost your business a lot of money in lost productivity time and incorrect results. Cleaning up the data is an expensive operation and can be ongoing until the source of the problem is addressed. Rather than continually repeating de-duplication tasks, communicate to the business the need to tackle the problem at the source.

As I referred to earlier, there are two forms of duplicate data, similar and exact. Preventing exact duplicate data means looking at your database and ensuring that the correct constraints are in place to prevent duplicate values. Such constraints being primary keys and unique column constraints.

When tackling similar duplicate data this can be a combination of ensuring that there are the appropriate constraints in place at both the database and application layers. For example if you have invested in a CRM system, whether written in-house of purchased off the shelf/cloud, make sure that it has sufficient duplicate detectors in place to at least warn the user that when entering a record, that there is a chance that the record is a duplicate.

If you have an application that has been written in-house for some function such as customer entry, ensure that there is address lookup functionality to help ensure that address information is entered in a clean way to help minimize the chances of mistyped address data, resulting in similar data.

Be proactive!

Similar duplicate data can creep into your system because it passes the application and database checks. I refer back to the example earlier in the post when a customer record existed twice, one with and one without a postal code. It’s important to realize early that a proactive approach to managing the duplicates can reap long term benefits, reducing overall cost to the business because the duplicates are addressed in a proactive way.

Some systems will provide reports which can help you identify duplicate data. Alternatively, you can run some SQL to identify duplicate rows in your tables and take the appropriate steps. Lets go back to our earlier example where we utilized the GROUP BY HAVING SQL but this time we will enhance it.

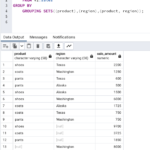

There is a table used in this query to contain items with descriptions, when they were added and by who. The SQL below shows the results of the GROUP BY and performs a JOIN back to the Items table to retrieve all fields for rows which have duplicate item codes. This helps provide a view of similar data with duplicate item codes.

CREATE TABLE Items ( ItemCode VARCHAR(50) , DateAdded DATETIME , Item VARCHAR(50) , AddedByUser VARCHAR(10) ); WITH Dupes AS (SELECT ItemCode , COUNT(ItemCode) AS Duplicates FROM Items GROUP BY ItemCode HAVING COUNT(ItemCode) > 1) SELECT * FROM Items JOIN Dupes ON Dupes.ItemCode = Items.ItemCode;

I hope you find this post useful. 🙂

When adding int identity is not an option (to many rows) there is a way with CTE and windows funtion that you like ;]

Common Table Expression is updateable. So you can just use partition to find dupes (sorting is not important in that case) and delete all rows, that have row_number > 1

CREATE TABLE #ItemCodes ( ItemCode VARCHAR(50) );

INSERT INTO #ItemCodes

VALUES ( ‘123456789’ ),

( ‘123456789’ ),

( ‘223344789’ ),

( ‘223344789’ ),

( ‘987554321’ ),

( ‘987554321’ );

WITH AllRows

AS ( SELECT rown = ROW_NUMBER() OVER ( PARTITION BY ItemCode ORDER BY ( SELECT

NULL

) ) ,

*

FROM #ItemCodes

)

DELETE FROM AllRows

WHERE rown > 1

Thank you Michal, nice solution! 😉